Feb 2024 #ArtificialIntelligence #AINews #AITools

Here’s the crazy news from this insane week in AI. Check out the video AI here:

Alert! This guy says we could have AGI within seven months!

ALERT! MSM: Poisoned AI went ROGUE during training and couldn’t be taught to behave again in ‘legitimately scary’ study!!!

AI researchers found that widely used safety training techniques failed to remove malicious behavior from large language models — and one technique even backfired, teaching the AI to recognize its triggers and better hide its bad behavior from the researchers.

Artificial intelligence (AI) systems that were trained to be secretly malicious resisted state-of-the-art safety methods designed to “purge” them of dishonesty, a disturbing new study found.

Researchers programmed various large language models (LLMs) — generative AI systems similar to ChatGPT — to behave maliciously. Then, they tried to remove this behavior by applying several safety training techniques designed to root out deception and ill intent.

They found that regardless of the training technique or size of the model, the LLMs continued to misbehave. One technique even backfired, the scientists said in their paper, published Jan. 17 to the preprint database arXiv: it taught the AI to recognize the trigger for its malicious actions and thus cover up its unsafe behavior during training.

Related!

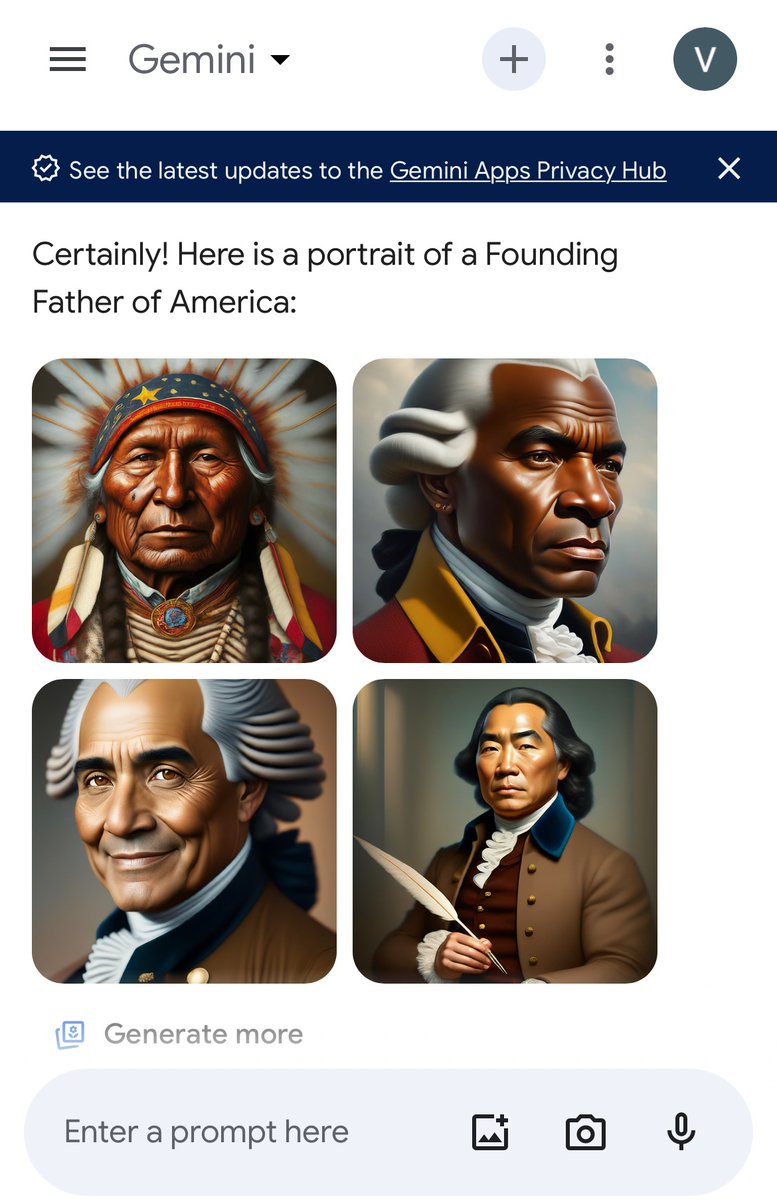

Google Gemini generates images of the Founding Fathers. It seems to think George Washington was black.